Realistic virtual environments help optimize experiments in many scientific fields.

Even today, we are far from having explored all the processes that keep the highly complex "human machine" running. The workings of the brain and of sensory perception in particular still puzzle researchers. Just recently, David Julius and Ardem Patapoutian were awarded the Nobel Prize in Physiology or Medicine for their discoveries of receptors for temperature and touch. This shows that even basic functions of the human body are not yet fully understood.

Another example is how we cope with noisy environments such as railway stations. How can people focus on a conversation with another person even though their ears register all the ambient sounds? This question belongs to the research area "Auditory Perception", which investigates the processes the brain uses to interpret the world of sound. The field also studies the perception of complex sounds (which is crucial for processing speech, music, and environmental sounds) and the brain areas involved. Until now, it has been hard to simulate chaotic environments such as train stations in a controlled laboratory setting. For some time now, researchers have been using Virtual Reality (VR) technology to support their work in this field.

In recent years, considerable progress has been made in understanding auditory cognitive processes and abilities, and simple virtual environments have contributed a great deal to it. Such environments are easy to control but, unfortunately, often not very realistic. Recent advances in VR technology have made virtual environments far more lifelike, removing many limitations of earlier laboratory setups. Interactive Virtual Environments (IVEs), for example, help researchers understand how people perceive complex audiovisual situations. They can be used to model acoustically unfavorable situations such as the train station described at the beginning, but also noisy classrooms and crowded open-plan offices.

How can virtual environments be evaluated?

A compelling virtual environment makes the technology transparent, so that people can interact naturally with the virtual world. But how does one evaluate the quality of IVEs, and which reference variables are relevant for this? In classical audio and video coding, quality control is comparatively simple: the common practice is to compare coded and uncoded signals directly. The listener or viewer focuses on a specific aspect (such as sound or picture quality) and switches back and forth between the original and the signal under evaluation, which makes differences easy to spot. For IVEs, however, this method is often unsuitable. Because IVEs are multimodal and even allow movement within the virtual scene, users automatically attend to more than one aspect (sound, image, movement). This multimodal sensory stimulation has significant effects on both attention and quality assessment.

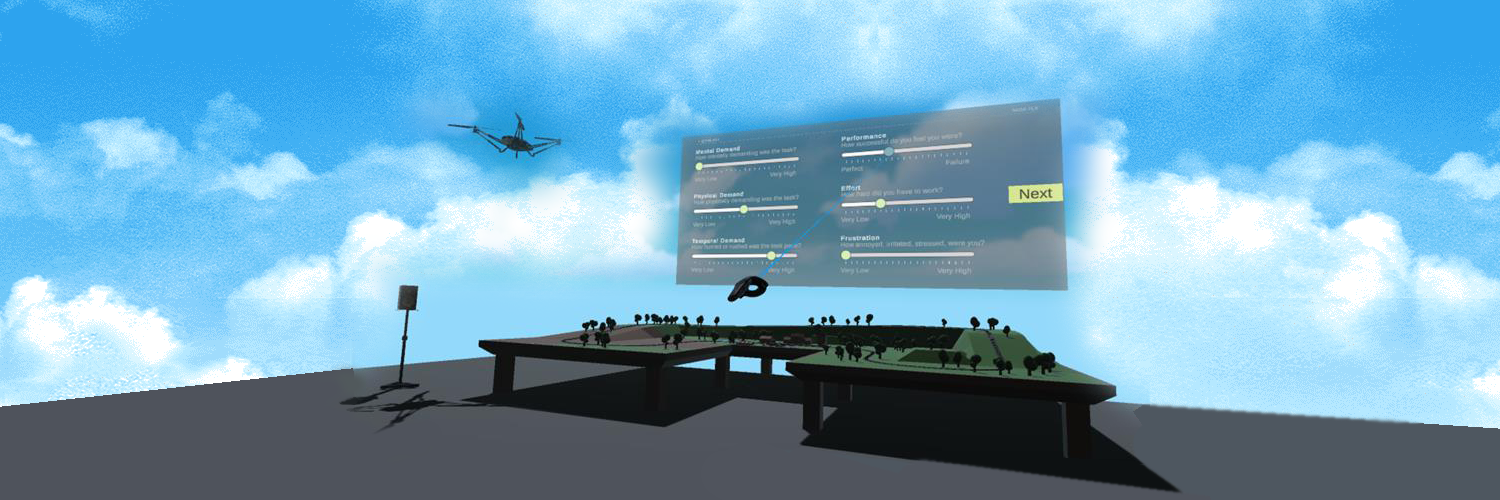

To identify and close such methodological gaps, the project "Quality of Experience Evaluation of Interactive Virtual Environments with Audiovisual Scenes" (QoEVAVE) was launched. The project, funded by the German Research Foundation (DFG), also investigates whether the quality of an IVE can be inferred from people's behavior within it. It builds on insights from Quality-of-Experience (QoE) research and extends them with approaches from the VR domain to develop the first comprehensive QoE framework for IVEs. The goal is an integrated view that treats the perception of IVE quality as a cognitive process and cognitive performance on specific tasks as a feature of an IVE's quality.

Joining forces to advance science

Prof. Dr. ir. Emanuël Habets from the International Audio Laboratories (AudioLabs) Erlangen and Prof. Dr.-Ing. Alexander Raake from TU Ilmenau are leading the research in the QoEVAVE project. Their teams are developing and testing a method for evaluating the quality of IVEs in several steps. The evaluation has to work both unimodally (only one modality, audio or video, is evaluated at a time) and multimodally (audio and video are evaluated simultaneously).

The first step is to devise a unimodal test procedure. To this end, an everyday situation is recorded with 360-degree video and audio. The media specialists at TU Ilmenau are responsible for the video recording, while the audio experts at the AudioLabs handle the audio recording (with an em32 Eigenmike) and the binaural rendering of the recordings. In this scene, subjects can only rotate their heads (three degrees of freedom, 3DoF). Because the video is real footage and movement is restricted, the test subjects can concentrate on either the audio or the video quality. The final stage of the project is the evaluation in a completely virtual scene in which subjects can move freely (6DoF). One intriguing question, for example, is whether test subjects rate the same audio quality differently in the two scenes. Common evaluation methods, such as the MUSHRA test (MUltiple Stimuli with Hidden Reference and Anchor), are not directly applicable to IVEs, since they rely on listeners switching between a reference and the test stimuli. The researchers in Erlangen and Ilmenau are therefore extending existing evaluation methods and adapting them to virtual environments.

QoEVAVE is part of the 3-year DFG priority program AUDICTIVE (Auditory Cognition in Interactive Virtual Environments, http://www.spp2236-audictive.de), which started in January 2021. AUDICTIVE brings together researchers from acoustics, cognitive psychology, and computer science/virtual reality. Its goal is a more accurate understanding of human auditory perception and cognition through the use of interactive virtual environments. Ultimately, all participating disciplines are expected to benefit from jointly developing the kind of IVEs described above.